Docker

Cloud CMS offers the option to run its software on-premise or within a virtual private cloud. This option is available to subscription customers and can be used for both development and production installations.

The actual installation and management of the various services involved in a full-scale production-ready Cloud CMS deployment is facilitated greatly through the use of Docker. Docker provides a way for all of the various tiers to be encapsulated into appropriate microservices. These microservices run within Docker containers that can be orchestrated together to deliver the full platform.

Cloud CMS delivers its platform for use on-premise and in virtual private clouds via Docker. Docker is a capable technology with which we've had a lot of success in managing our own software-as-a-service offering. In addition, Docker has seen fantastic adoption by major cloud providers including Amazon, Rackspace and others.

The Cloud CMS Docker images contain everything needed to run. Unlike traditional software, there is no need to download an installer and then proceed through installation steps. Rather, Cloud CMS provides runtime configurations via Docker Compose that allow you to run a single command and go. Docker will download the Cloud CMS images, launch the containers and wire them together.

We recommend the use of Docker Machine, Docker Swarm and the Docker AWS EC2 Engine for provisioning and running production hosted environments. These tools allow you to take advantage of Auto Scaling groups for production scale out. AWS has excellent Docker support that is improving every day.

Environments

Cloud CMS produces several Docker images that you may launch to construct the architecture for your own Cloud CMS environments.

The design of a given environment will vary depending on your needs. Production environments, for example, should be clustered to provide some assurance of fault tolerance and failover, whereas non-production environments may be smaller and more volatile in nature.

The same images can be used across all environments but may be configured differently upon being instantiated into containers. You can think of containers as "instantiated images" (with a configuration), where an image is essentially a template that can be stamped out using Docker to construct the container and bring it online.
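The image/container relationship described above can be sketched in a few lines of Python. This is only an analogy, not how Docker is implemented; the `Image`, `Container` and `instantiate` names and the `profile` and `replicas` settings are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    """A reusable template, for example cloudcms/api-server."""
    name: str


@dataclass
class Container:
    """An instantiated image plus environment-specific configuration."""
    image: Image
    config: dict = field(default_factory=dict)


def instantiate(image: Image, **config) -> Container:
    """Stamp out a container from an image with the given configuration."""
    return Container(image=image, config=dict(config))


# The same image backs both environments; only the configuration differs.
# The configuration keys below are hypothetical.
api_image = Image("cloudcms/api-server")
dev = instantiate(api_image, profile="development", replicas=1)
prod = instantiate(api_image, profile="production", replicas=3)
```

Both `dev` and `prod` refer to the same `api_image`, which mirrors the idea that one shipped image serves every environment.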

Images

The following images are shipped as part of a Cloud CMS release:

- API Server (cloudcms/api-server)

- UI Server (cloudcms/ui-server)

- Virtual Server (cloudcms/virtual-server)

- Web Server (cloudcms/webshot-server)

Kits

Cloud CMS also provides developer kits that we recommend you look to for examples of typical environments. The kits use Docker Compose to describe the environments and provide sample files to get you started.

Many of the kits make use of third-party Docker images that Cloud CMS does not produce or ship. These include the official images for MongoDB and ElasticSearch but also include HA Proxy, ZooKeeper and Redis.

- HA Proxy is used as a load balancer

- ZooKeeper is used to demonstrate automatic cluster configuration on non-AWS environments (such as Digital Ocean, Rackspace or purely on-premise)

- Redis is used as a backend provider for the Node cluster's distributed cache

Typical Environments and Architectures

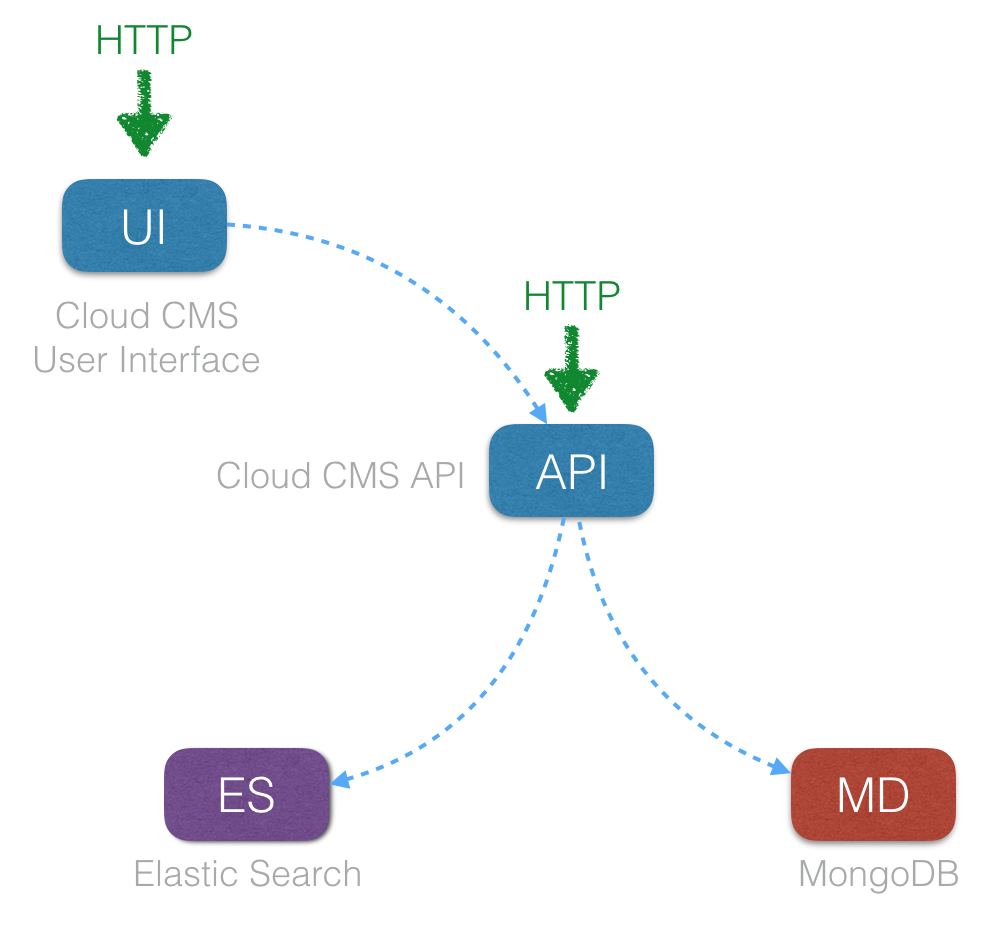

Simple

This is a non-production environment that you can launch on a laptop or a small server for development, staging, QA or other test purposes.

In addition, you may choose to launch MongoDB and ElasticSearch as containers or you may have those servers hosted externally.

This environment allows developers to use the API and also log into the user interface to work with content and schemas within the graphical front-end.
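As a rough illustration of the shape such an environment takes, the sketch below builds a Compose-style service map in Python and serializes it to JSON (which is also valid YAML). The Cloud CMS image names come from the list of shipped images; everything else is an assumption: the service names, the `depends_on` ordering, and the `mongo` and `elasticsearch` image references are placeholders, and ports, volumes and environment variables are omitted entirely. The Compose files in the developer kits are the authoritative reference.

```python
import json


def simple_environment() -> dict:
    """Return a Compose-style description of the Simple environment.

    Service names and dependencies are illustrative assumptions,
    not the contents of an actual Cloud CMS kit.
    """
    return {
        "services": {
            "api": {
                "image": "cloudcms/api-server",
                "depends_on": ["mongodb", "elasticsearch"],
            },
            "ui": {
                "image": "cloudcms/ui-server",
                "depends_on": ["api"],
            },
            # Third-party images (placeholders); these tiers may
            # instead be hosted externally, in which case they would
            # be dropped from the service map.
            "mongodb": {"image": "mongo"},
            "elasticsearch": {"image": "elasticsearch"},
        }
    }


compose_json = json.dumps(simple_environment(), indent=2)
print(compose_json)
```

Dropping the `ui` entry from the service map gives the general shape of an API-only variant.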

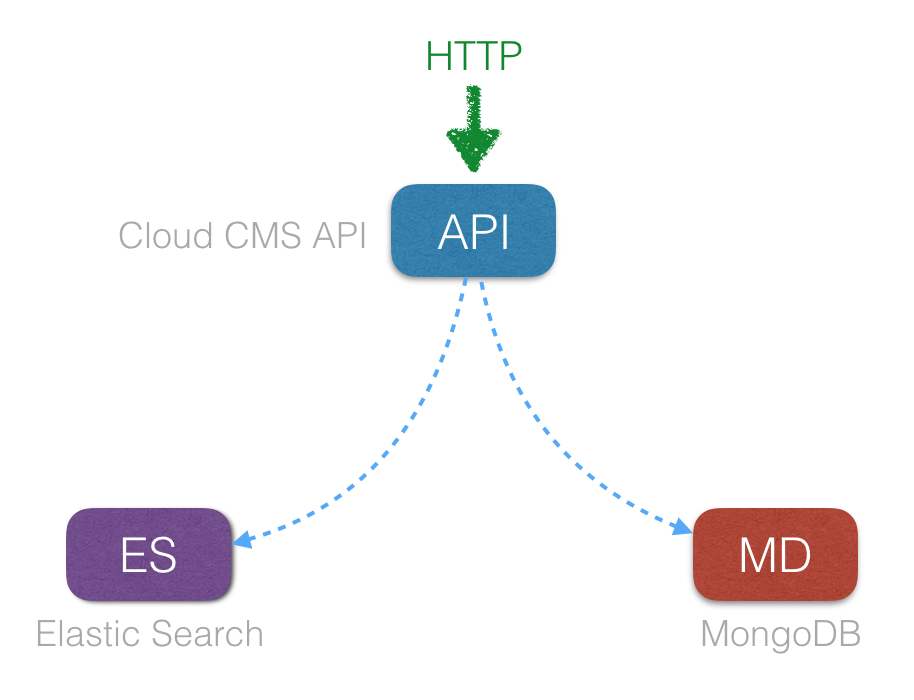

Simple (API Only)

This non-production environment is similar to the previous one but leaves off the user interface. This is common for scenarios where you solely wish to run operations against the Cloud CMS API (such as in an embedded case or perhaps as part of an application test runner).

In addition, you may choose to launch MongoDB and ElasticSearch as containers or you may have those servers hosted externally.

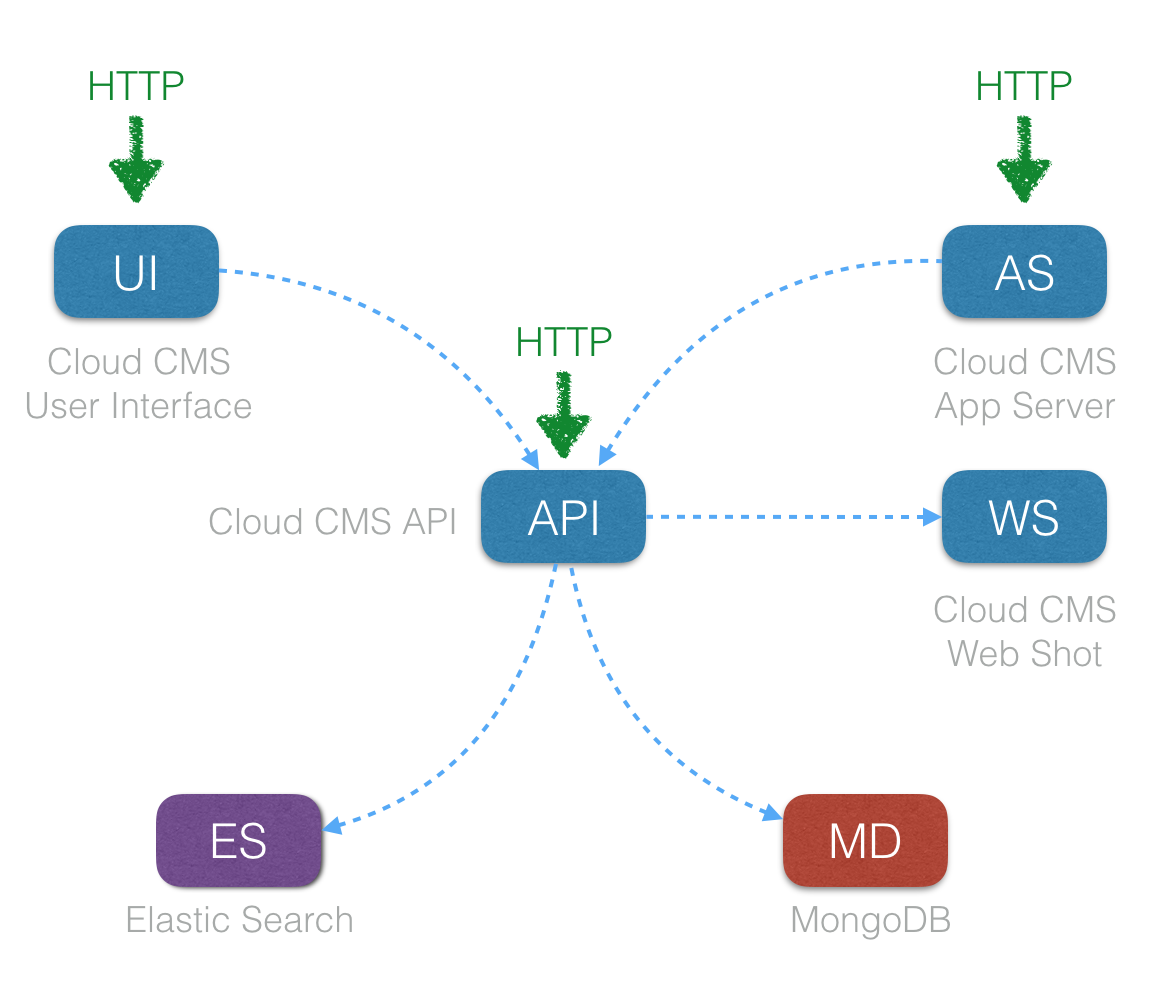

Simple (All Images)

This is also a non-production environment but demonstrates how additional Cloud CMS produced images hook into the architecture.

In addition, you may choose to launch MongoDB and ElasticSearch as containers or you may have those servers hosted externally.

The cloudcms-appserver image provides a runtime with Node.js and other OS dependencies pre-installed so that your custom Node applications can be launched straight away. These custom Node applications can utilize the functionality of the cloudcms-server node module to produce web sites with static caching, CDN compatibility, web content management page generation and more.

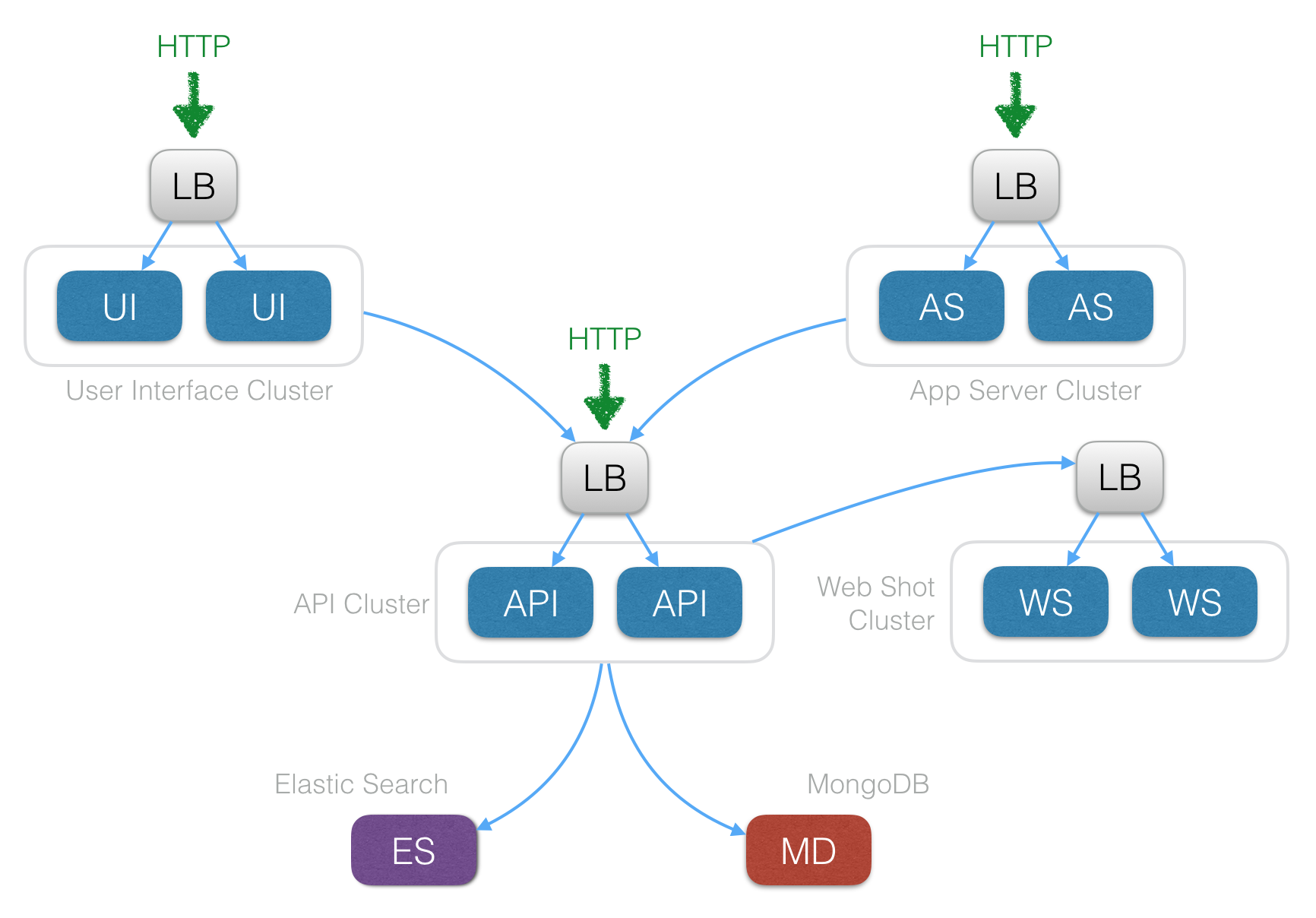

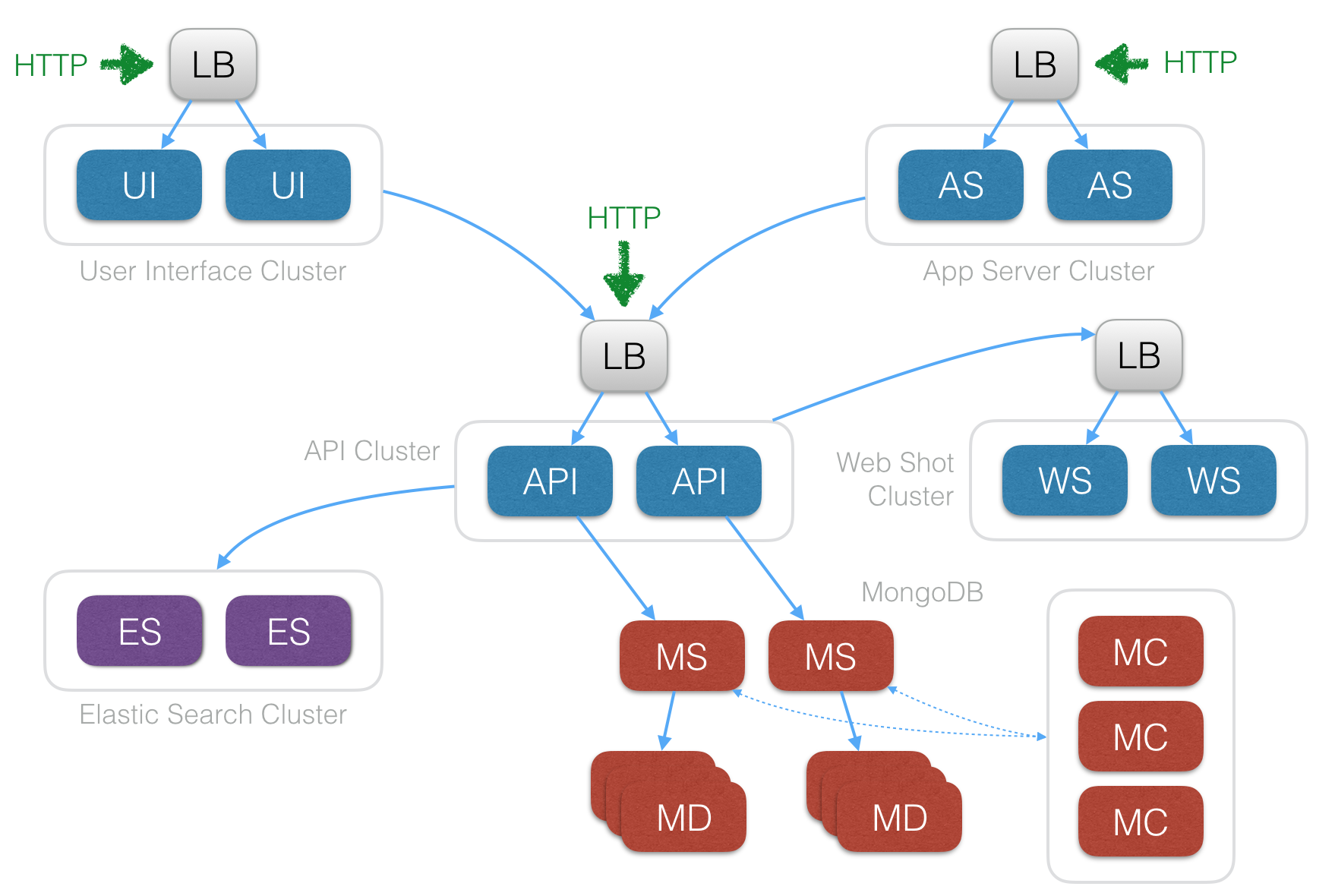

Clustered

This is a production environment where the API servers are clustered. This means that there are multiple API servers running and they're aware of one another. A distributed cache, lock and messaging system operates across the cluster allowing any of the API cluster members to serve requests equally. A load balancer runs ahead of the API servers and distributes requests across them.

In an AWS environment, this is typically an AWS Elastic Load Balancer but in many of the kits, you will see HA Proxy being used.

In addition, the UI tier and Web Shot tier are clustered. In the former case, the underlying cloudcms-server Node module provides Redis support to enable the cluster-wide distributed cache. In all cases, a load balancer runs ahead of the cluster.

The API server is architected to be stateless and fast. API servers are designed to fail fast - they can be spun up or spun down and the cluster automatically detects the new or departing members and redistributes state accordingly.
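To make the idea of interchangeable cluster members concrete, here is a minimal Python sketch of a load balancer that tolerates members joining and leaving. It assumes a simple round-robin policy and is not a model of how AWS Elastic Load Balancer, HA Proxy or the Cloud CMS cluster membership protocol actually work; the `RoundRobinBalancer` class and the `api-1` and `api-2` names are hypothetical.

```python
class RoundRobinBalancer:
    """Distributes requests across whichever members are currently present."""

    def __init__(self):
        self._members = []
        self._next = 0

    def join(self, member: str) -> None:
        """Register a newly started API server."""
        if member not in self._members:
            self._members.append(member)

    def leave(self, member: str) -> None:
        """Remove a stopped or failed API server."""
        if member in self._members:
            self._members.remove(member)
            self._next = 0  # restart the rotation over the remaining members

    def route(self) -> str:
        """Pick the member that should serve the next request."""
        if not self._members:
            raise RuntimeError("no API servers available")
        member = self._members[self._next % len(self._members)]
        self._next = (self._next + 1) % len(self._members)
        return member


balancer = RoundRobinBalancer()
balancer.join("api-1")
balancer.join("api-2")
first_three = [balancer.route() for _ in range(3)]

# A member departs; traffic continues on the remaining server.
balancer.leave("api-1")
after_leave = balancer.route()
```

Because the API servers are stateless, any member can take any request, which is what lets a policy this simple work.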

Clustered (with MongoDB and ElasticSearch scale out)

This is a production environment where the Cloud CMS database layers are scaled out for redundancy and throughput.

The MongoDB tier is configured to use replica sets and/or sharding. If sharding is used, separate mongos processes and config server processes exist to coordinate connectivity to the correct shard and/or replica.
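For reference, clients normally address a replica set by listing several members and naming the set in a standard MongoDB connection string. The Python sketch below assembles such a string; the host names, the `rs0` set name and the `cloudcms` database name are hypothetical, and how a Cloud CMS API server is actually pointed at MongoDB is not covered here.

```python
from urllib.parse import urlencode


def replica_set_uri(hosts, replica_set, database=""):
    """Build a MongoDB connection string that lists replica set members."""
    host_list = ",".join(hosts)
    query = urlencode({"replicaSet": replica_set})
    return f"mongodb://{host_list}/{database}?{query}"


# Hypothetical hosts and names, for illustration only.
uri = replica_set_uri(
    ["mongo-1:27017", "mongo-2:27017", "mongo-3:27017"],
    "rs0",
    "cloudcms",
)
print(uri)
```

In a sharded deployment, clients would instead connect to one or more mongos routers rather than to the replica set members directly.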

The ElasticSearch tier is also configured for clustering. The clustering mechanics for ElasticSearch are very similar to those of Cloud CMS itself. ElasticSearch servers provide nodes that may join or leave an ElasticSearch cluster at any time. ElasticSearch cluster state is automatically redistributed on the fly.

Clustered (with dedicated API workers)

Another common production environment is one where the total set of API workers is partitioned into the following groups:

- Workers

- Web Request Handlers

In this configuration, the load balancer distributes live requests to the Web Request Handlers. The Web Request Handlers may put asynchronous jobs onto the distributed work queue as part of what they do to handle the incoming web request. Workers consist of API servers that are not connected to the load balancer. Instead, they wait for jobs to become available and then work on them.
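The division of labor can be sketched with an in-process queue standing in for the distributed work queue. This is a simplified illustration under that assumption, not the Cloud CMS job system; the function names, the job payload and the worker names are hypothetical.

```python
import queue
import threading

work_queue = queue.Queue()  # stand-in for the distributed work queue
results = []
results_lock = threading.Lock()


def handle_web_request(request_id):
    """Web Request Handler: answer immediately and defer the heavy work."""
    work_queue.put({"job": "index", "request_id": request_id})
    return {"request_id": request_id, "status": "accepted"}


def worker(name):
    """Worker: not behind the load balancer; waits for jobs and runs them."""
    while True:
        job = work_queue.get()
        if job is None:  # sentinel telling the worker to shut down
            work_queue.task_done()
            break
        with results_lock:
            results.append((name, job["request_id"]))
        work_queue.task_done()


workers = [
    threading.Thread(target=worker, args=(f"worker-{i}",)) for i in range(2)
]
for thread in workers:
    thread.start()

# Live requests return right away while jobs drain in the background.
responses = [handle_web_request(i) for i in range(5)]

work_queue.join()  # wait until every queued job has been processed
for _ in workers:
    work_queue.put(None)
for thread in workers:
    thread.join()
```

The handlers never wait on job completion, so the number of handlers and the number of workers can be sized independently.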

The distinction between Workers and Web Request Handlers allows you to fine-tune your production environment to more finely match Web Request Handler capacity to API traffic demand. The instance types or boxes serving live web requests will generally require more CPU, whereas the Workers will generally favor more memory-intensive boxes.