Intelligent Agents

AI agents work alongside your teams to help understand your content, search for documents and generate insightful answers.

Publish trained data sets to your vector DBs and customer-facing RAG and Private GPT applications.

Key Features

-

AI Agents

Free up your team. AI Agents understand your content to provide helpful answers and generate solutions while automating time-consuming, repetitive tasks.

-

CMS for RAG apps

Generate data sets to deploy to your custom RAG applications. Train AI and validate with instant preview and scoring. Publish on time with incremental updates to your custom Vector DBs.

-

CMS for PrivateGPT / Custom LLM

Spin up new Amazon Bedrock, Azure or Private GPT to incrementally train your own personal AI assistants with your knowledge base of information and expertise. Deploy in real-time with scheduled publishing.

-

Private and Secure

Own your Data. Run everything within your own containers if you wish. Secure your data so it never leaves your network.

Smarter Content that works for you

AI Agents

Provides help, insight, automation of repetitive tasks and content enhancement with classification and generative AI.

CMS for RAG

Deploy incremental, trained data sets to your custom retrieval-augmented generation (RAG) applications and vector DBs with scheduled publishing.

CMS for Private GPT

Run local LLM or PrivateGPT models and incrementally fine-tune them with trained data sets and custom embeddings as editors work.

Private and Secure

Own your data. Run both Gitana and your custom LLMs on-premise to ensure that all data and all traffic never leaves your network.

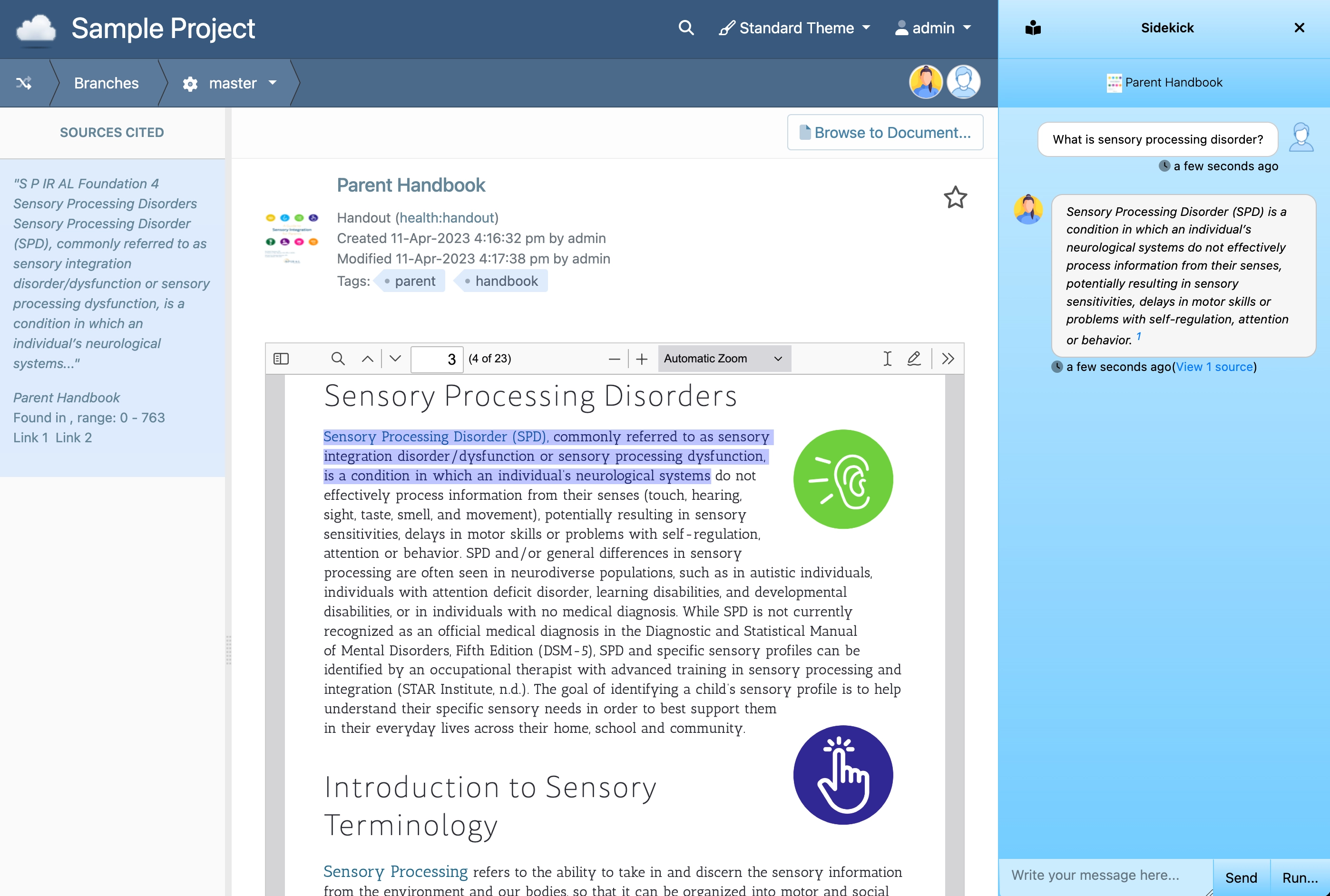

AI Agents

Automated sidekicks free up your team to be more efficient and more creative.

-

Helpful assistants

Free your team from repetitive, time-consuming, heavy tasks. AI agents perform their work in isolated branches and then present that completed work to you for further editing and approval.

-

Conversational Insights (with citations)

Converse with your agents about content. Ask questions and glean insights on a single document or entire collections of documents all at once. Agents provide citations to support from where within your content the answer was sourced.

-

Content Generation

AI agents utilize LLMs, including ChatGPT and/or Private GPT models, to generate new and interesting content for you. They summarize documents, generate questions and answers, produce key insights, extract terms and bullet points and much more.

CMS for RAG apps

Empower your marketing and editorial teams to publish frequently, impactful updates to the content that powers your RAG (retrieval augmented generation) and custom LLM applications.

-

Data Set Generation

Run training to build data sets from your editorial changes. Data sets are trained using server-side scripting. They are a trained truth table of statements to be sent to your vector DB.

-

Test, Preview and Validate

Preview and test your data sets against your custom RAG app vector DBs or LLM fine-tuning endpoints to score them and understand if your dataset effectively trains the endpoint to generate the intended responses. Editors adjust their content to increase the score ahead of go-live.

-

Scheduled Publishing

Gitana automatically deploys incremental, trained data sets to your RAG app vector databases or LLM endpoints. These include customizable embeddings (with the embedding model of your choice), vector sizes and more.

CMS for Private GPT

Deployed trained data sets to private GPT, on-premise custom LLMs and popular cloud-hosted LLMs using fine-tuning.

-

RAG Vector Databases

Gitana drives the vector databases that back your RAG (retrieval augmented generation) applications. Generate trained datasets from content deltas and preview those changes with incremental deployment to staging DBs.

-

PrivateGPT / Local LLM

Connect to your local LLM or Private GPT API endpoints to deliver trained data sets for incremental fine-tuning. Own your source data -- ensuring that it never leaves your network.

-

Fine-Tuning

Incrementally deliver trained data set deltas to your fine-tuning endpoints for popular LLM offerings (such as OpenAI or Claude) as well as custom, on-premise LLMs (such as Llama 3).

Own your Data

Both Gitana and your custom LLM / Private GPT installations can be run entirely on-premise, in the cloud or as a hybrid, best of both worlds approach.

-

Keep it Local

Run both your Gitana and LLM instances on-premise and on the same private network to ensure that your data is entirely secure and never leaves your network.

-

Secure Access Control

Safeguard, control and audit who accesses your content, ensuring robust security and compliance of your information. Enterprise access policies ensure strict, centralized control and accountability.

-

Best of Both Worlds

Connect and integrate to any combination of public or private LLMs, vector databases, private GPT installations or custom on-premise LLMs as you see fit to implement your business requirements.

Automatic Generation of Datasets

Incrementally build and augment your training and validation data sets whenever content changes in your branch. Execution scripts run on the affected content and produce increment data sets that are submitted to your machine learning pipelines.

-

Data Set Generation

Background jobs execute the generation of both training and validation datasets using server-side scripting. Scripts query your content and fetch relational structures from your content graph. Data is normalized and mapped into training completion rows in JSON, JSONL or other required formats.

-

Incremental

As new data appears in the content graph, it is incrementally fetched and compiled and then aggregated into the total dataset. This makes dataset augmentation fast and allows for incremental training and fine-tuning of your AI models as new data is made available.

-

Training and Validation

Training data sets are maintained distinct from validation data sets, allowing for both tuning of your AI models and validation of those models with scoring and feedback needed to calibrate the content model and data set generation heuristics.

Machine Learning Pipelines

Train and validate your AI models using your approved content with continuous training. Datasets are generated and submitted to Machine Learning pipeline providers for integrated testing and validation of your models. Scored results are returned and acted upon to improve your content set, training scripts or graph heuristics.

-

Training ahead of Release

Publishing workflows allow you to schedule content for a future release. Ahead of this release, pre-train a new revision of your model using approved content. Then, on launch, swap the new AI model in for the old one to leverage the new set of inferences.

-

Distributed Training Execution

Training and validation runs as a distributed background job. Keep track of progress and receive notification when training completes. Retrieve results, act on them or advance the publishing workflow for iteration.

-

Branch-based, A/B Testing

Training and validation works hand-in-hand with branches. Create a new branch of content changes and train a branch-specific revision of your AI model with your new content changes. Then dynamically switch models within your app to perform in-application A/B testing to determine which models yield a best fit.

Fine Tune for Continuous Learning

Validation results provide insights into how well your trained AI model's predictions correlated with what you expected. Improve your model's accuracy by fine-tuning your content, graph relationships, weights and training scripts.

-

Scoring

Having trained your model with the training dataset, the validation dataset yields test results to indicate how well your model is trained. See what tests failed and the overall percentage score for your validation run.

-

Fine Tune

Adjust your content properties or weighted graph relationships to tighten the relationships between instances. Optimize your content so that preferences are given to the kinds of outcomes that you expect upon AI model inference.

-

Iterate

With your optimizations in place, re-run the training with a new version of your AI training model. Compare scores to see what improved and whether further investment is needed.

AI Providers

Connect your content publishing and workflow processes to Machine Learning / AutoML providers for automated training, validation and fine-tuning of your content set and AI models ahead of release.

Gitana features pre-built integrations to popular providers or you may connect to your own self-managed pipelines.

Google Vertex AI

Azure ML

AWS ML

OpenAI

Watson ML

Ready to Get Started?

Unlock your data with smart content services and real-time deployment